The Eight Challenges You’ll Face With On-Premise Artificial Intelligence

As glamorous as it is to have your own Artificial Intelligence Optimized On-Premise Data Center, it doesn’t come easy. It is absolutelly true that if done right it boasts much better performance and much lower costs than resorting to the cloud or even using co-location to perform your processing workload when creating AI driven solutions. However, most people are not aware of what really makes an AI Optimized Data Center and end up building an expensive half-baked solution that can’t perform on par with the hardware specs. Here is a quick list of the top 8 challenges, in our team’s experience, that you’ll face when putting together your very own on-premise Data Center for AI.

1 – The Hardware

Hardware for Machine Learning is more expensive than hardware used for the average problem. And it isn’t just the GPU that makes it more expensive: the combination of high performance processors, memory, bus, and network cards only helps in compounding the costs of these solutions. Which is why the hourly rate for usage of this kind of hardware in the cloud is the highest of them all. After doing some estimates, you’ll quickly realize that the budget needed to host an AI operation in the cloud can be prohibitively expensive. Oh, and I forgot to mention that when you’re operating in the cloud you’ll pay for the instances plus the data movement inside and outside of the network. Take it from NASA that forgot to account for this, and ended up with the prospect of having to add another $30M a year to their budget in order to afford just for data egress charges. And in case you didn’t realize yet, when it comes to AI training, an absurd amount of data movement is par for the course. With a total budget of $95M a year, my guess is that NASA would have been much better off running their own data center.

Aright, you got the picture. Cloud is expensive. Then you look at those Engineers winning Kaggle competitions on their gaming desktops, you look up the prices for that kind of consumer-grade hardware and you realize that it is up to 10x cheaper than server-grade hardware with seemingly similar specifications. By the way, the majority of GPUs we use on server-grade hardware (that costs between $7,000 and $12,000) share the same architecture with gaming GPUs (retailed between $700 and $1200). You dash to your favorite computer store, orders a bunch of these and declare a win. Your new cluster cost as much as what you’d be paying monthly to AWS for what seems to be equivalent capabilities, right?

Not too fast. You’re now ready to face the second challenge on our list.

2 – Getting the Right Hardware

When you look up the specs, a GTX Titan V (retailed at $3,000) is pretty much on par with a Tesla V100 (sold at around $12,000 at the time of launch). Titan V was rated at 110 TeraFLOPS (tensor) and the V100 was rated at 112 TeraFLOPS (tensor). The v100 boasted an extra 4Gb of RAM, but hey… that’s not a big deal!

That’s when you learn that despite all the similarities, these are completely different animals, and only one of them is capable of achieving its full potential in a server environment.

Let’s start with the design. Here is a Titan V:

And here is the Tesla V100 Pci-e equivalent:

Did you notice the difference?

Teslas are designed to work in tandem with other Teslas. That is why Teslas don’t have any fans. They’re intended to be stacked right next to one another and work at full power 24×7. The only thing preventing them from a meltdown is the huge heatsink covering the whole board and the powerful deafening turbine like fans that exist inside server-grade computers.

Take a bunch of Titan Vs, and stick them right next to each other is what you have is a fire hazard. The blower is unable to operate when its blocked by a neighboring card, and that forces you to skip a slot in order to give the blower enough breathing space.

And this is just one random reason why you really shouldn’t try consumer grade hardware for business grade work. We’ll write anothe piece in the future just covering the differences.

You better beef up your fire suppression system!

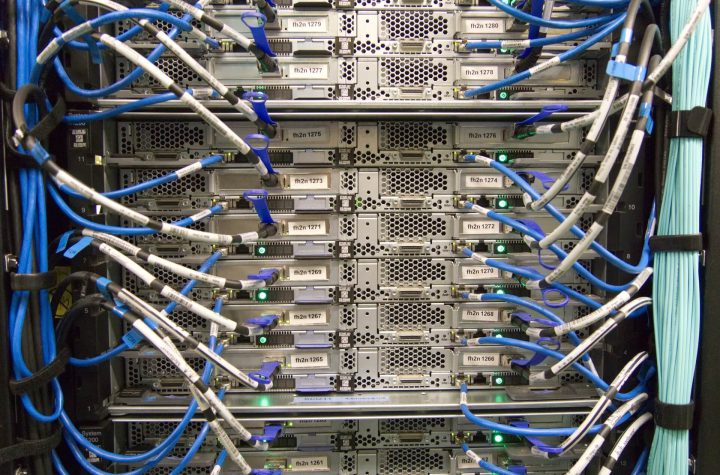

3 – Network

Often overlooked and misunderstood, network plays a huge role in your cluster. Forget about 10 Gbps networks. What? You’re not there yet? Listen to this very carefully: anything less than 10Gbps on an AI cluster can only entertain snmp packets and monitoring systems.

Do you think I’m exagerating? The current GPU architecture (Ampere) sold by Nvidia operates on either Nvlink or PCI-e 4.0. All you need to know for now is that Nvlink is much faster than PCI-e 4.0. Now, let’s take a step down and talk about PCI-e 3.0 which is exactly half as fast as PCI-e 3.0 and was the interface used by the previous architecture (Turing). This interface can transfer data at a rate of 16 Gigabytes Per Second in each direction, which comes up to 128 Gbps.

This means, in theory, that if your network can’t at least match that speed your GPU will be on vacation most of the time while the data slowly moves in for processing.

And here is another problem with consumer-grade hardware: you may be able to find Network Interface Cards faster than 10 Gbps to keep up with your GPU, but you may never get there. Most consumer grade motherboards and CPUs won’t have enough bandwidth to keep up with all your GPUs and NIC at full speed, halving the bandwidth available to all PCI-e cards.

4 – Storage

You’ve made this far. You managed to buy a top server for AI processing, got yourself some 8 or 16 A100 GPUs sitting on a cool Nvlink interface blazing at light speed and connected to your impressive 200gbps infiniband mellanox interconnects.

Now, you hook that baby to an NFS server and have experience the same feeling of buying a ferrari (literally, since these servers are sometimes more expensive than a ferrari) and being forced to drive it through a 5 mile 12 hour traffic jam. You’d be better off driving a Moped!

If you’re entering the AI game, understand that Storage speed can’t be neglected. Don’t overlook your network storage. Don’t overlook your local storage. Don’t overlook your memory speed. All of those can become a serious bottleneck and will kill the performance of your ferrari.

5 – Managing the Cluster

Now that you managed to wrangle the servers, network, and storage, congratulations! You finally earned the right to face the next challenge: managing the cluster. I’ll keep it simple here, but don’t construe this as it being a simple process. Managing your cluster involves dealing with cyberthreats (from inside and outside), having an efficient task distribution system, implementing a monitoring system, manage all the additional services needed, and physically tending to the hardware when it fails.

6 – Adequate Infrastructure

None of the above will go too far if you’re running your servers from a broom closet, or from the kitchen as we once tried in the beginning or our journey several years ago.

Here is what is going to happen: as soon as you manage to run your cluster on all pistons, it will overheat and begin to throttle in order to protect the hardware from a literal meltdown.

Without a proper infrastructure, you can’t guarantee access control, adequate power supply, adequate cool

ing, or adequate fire suppression system. Creating the proper environment for your servers is not an easy task, and it isn’t cheap either.

7 – Experimentation Infrastructure

If you’ve got it all right, not it is time for you to start running all of those experiments and perhaps automating some of it. With a fine tuned supercomputer, you don’t want to waste any cycles with inefficient processes.

Here at Amalgam, we use our proprietary automation system called Mercury to keep the cluster at 100% capacity while maximizing the amount of experiments we run in a short period of time.

We’ve heard accounts from some of our partners of customers purchasing a DGX server from Nvidia and running Jupyter notebooks on it. Please, don’t. Will it work? Sure. But all you’ll do is use Jupyter, stick to a desktop computer and you’ll experience a comparable performance for much cheaper.

8 – Deploy your Models

You did it! You wrangled all of these challenges and now you have your very own State of the Art AI model ready to rock in a real world application. Deploying this model in a scalable manner in production is your final big challenge. In most cases, you won’t need a GPU in order to use the model, but you’ll have to learn how to deal with multiple requests, load balancing across different instances, and keep latency low enough to make all of this worthwhile in production. For most applications, it is perfectly ok to serve your model from the cloud, and since it won’t require the same hardware you used to train it can actually be quite economical to do it that way.